|

2. Near-duplicate video

A significant amount of user-generated video content is

available on social media applications, such as ¡®YouTube¡¯ and ¡®LiveLeak¡¯.

Since digital video content can be easily edited and redistributed,

websites for video sharing commonly suffer from a high number of

duplicates (identical copies) and near-duplicates (copies that were the

subject of at least one transformation) [1]. The detection of duplicates and

near-duplicates can limit redundancy when presenting video search results

(e.g., by grouping duplicates and near-duplicates in the retrieval

results). Further, the detection of duplicates and near-duplicates is

important for the protection of intellectual property (IP).

• Near-duplicates video is approximately identical

videos

–

photometric

variations

•

e.g.,

change of color and lighting

–

editing

operations

•

e.g.,

insertion of captions, logos, and borders

–

speed

changes

•

e.g.,

e.g., addition or removal of frames

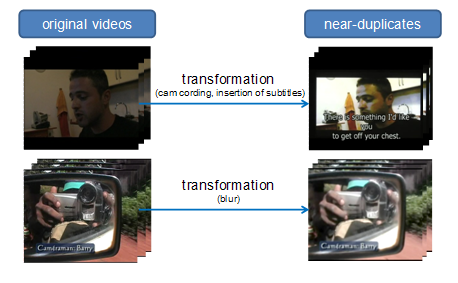

• Examples

of Near-Duplicates

Fig. 1 Examples of Near-Duplicates

3. Video signature

The performance of video copy detection techniques is

dependent on the representation of a video segment with a unique set of

features. This representation is commonly referred to as a video

signature. Video signatures need to be robust with respect to significant

spatiotemporal transformations. Also, video signatures need to allow for

efficient matching between a query video and video segments in the reference

video database.

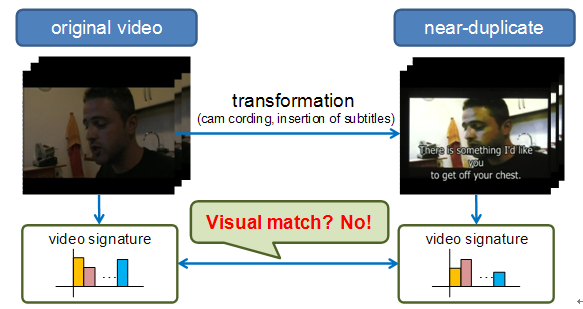

Video signatures are often created by extracting low-level

visual features from video frames. The extracted low-level visual

features may for instance describe color, motion, the spatial

distribution of intensity information, or interest points. However, it is

well-known that video signatures using low-level visual features are

highly sensitive to spatiotemporal transformations. This implies that

video signatures using low-level visual features do not perform well for

the purpose of detecting near-duplicates. Fig. 2 illustrate the problem

of video signatures using low-level visual features

Fig. 2. Use of Low-level Visual Features for Creating a

Video Signature

4. Semantic Video Signature Creation

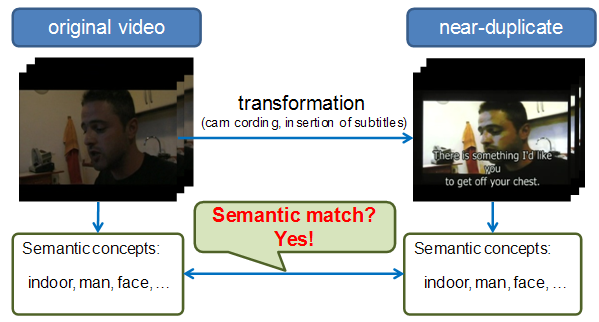

Although near-duplicates may have been the subject of

several spatial and temporal transformations, they essentially do not

contain any new or modified semantic information. In other words,

although near-duplicates may have significantly modified low-level

features (e.g., color manipulation), they still convey the same

information from a high-level point-of-view (e.g., a scene depicting a

beach). Therefore, it is worth investigating whether the detection of

near-duplicates can be realized by relying on the semantic information

conveyed by the video sequence. Consequently, taking into account the

observation that the presence of semantic information is generally

unaffected by low-level modifications of the video content, we propose a

new video copy detection method that makes use of semantic information in

order to construct a video signature.

Fig. 3. Use of Low-level Visual Features for Creating a

Video Signature

Automatic detection of semantic concepts has already been

extensively investigated. Conventional methods for semantic concept

detection classify video clips into several predefined concepts. Based on

the semantic annotations that are the result of semantic concept

detection, users are then able to find video sequences that contain the predefined

concepts. However, many video sequences exist that have similar semantic

concepts. Moreover, the number of semantic concepts used in automatic

concept detection is limited. Therefore, only using the number of

semantic concepts for the purpose of detecting video copies is

insufficient due to a lack of discriminative power.

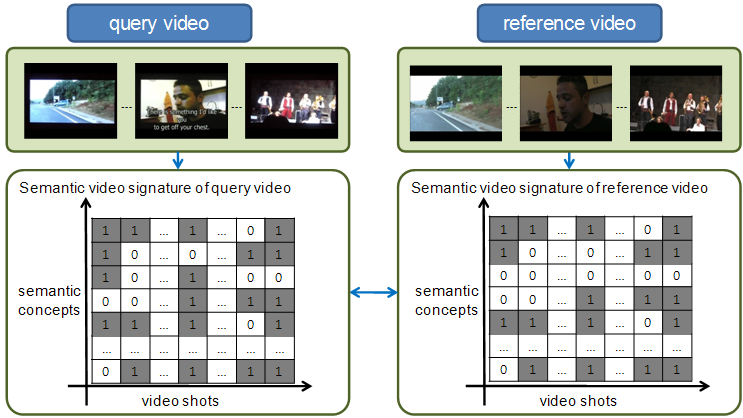

To resolve the above problems, we propose a video sequence

matching algorithm based on the detection of a number of popular semantic

concepts along the temporal axis. Although many video sequences exist

that contain similar semantic concepts, and despite the fact that the

number of semantic concepts used is limited, the temporal pattern of the

semantic concepts is different from video sequence to video sequence. The

number of semantic concepts used in our research is 34, including

concepts such as ¡®road¡¯, ¡®sand¡¯, and ¡®snow¡¯. These concepts typically

represent background information (i.e., general concepts); they typically

do not represent objects in the scene (i.e., specific concepts). The

variation in terms of background information is usually smaller than the

variation in terms of the objects appearing in a scene, intuitively

leading to detection rates that are more stable. It should be clear that

we treat the problem of video copy detection as a semantic concept

sequence matching problem. Therefore, as our matching process is based on

the use of semantic concepts, it is less sensitive to variation in terms

of low-level features.

Fig.

4. Semantic Video Signature Creation

5. Signature Matching and Copy Detection

Video copy detection aims at determining whether a given

query video sequence appears in a target video sequence, and if so, at

what location. In a next step, similarity is measured between a query

video sequence and target video sequences stored in a database. By

matching the semantic signature of the different video sequences, we are

able to measure the similarity between a query video sequence and the

target video sequences. Using the outcome of the similarity measurement,

the last step determines whether the input video is a near-duplicate.

Specifically, if the similarity is higher than a predefined threshold, then

the query video sequence is regarded as a near-duplicate of the target

video sequence.

|